The AI market is no longer about “which chatbot is best.” It is now defined by two distinct, fast‑moving races: reasoning (how well a system actually thinks through problems) and deep research (how well it explores, verifies, and synthesizes information across large bodies of data). The newest flagship models from OpenAI, Google, xAI, and others make this split clearer than ever.

Reasoning: The Newest Frontier Engines

Reasoning quality is no longer a commodity; the latest models show wildly different trade‑offs in depth, speed, and cost.

OpenAI’s newest frontier stack (including GPT‑5.2 and its extended reasoning modes) pushes the state of the art in multi‑step logic, coding, and long‑context problem solving. These models are designed to run multi‑hour workflows, maintain coherence over hundreds of thousands of tokens, and support agentic behavior like planning and tool use at production reliability levels.

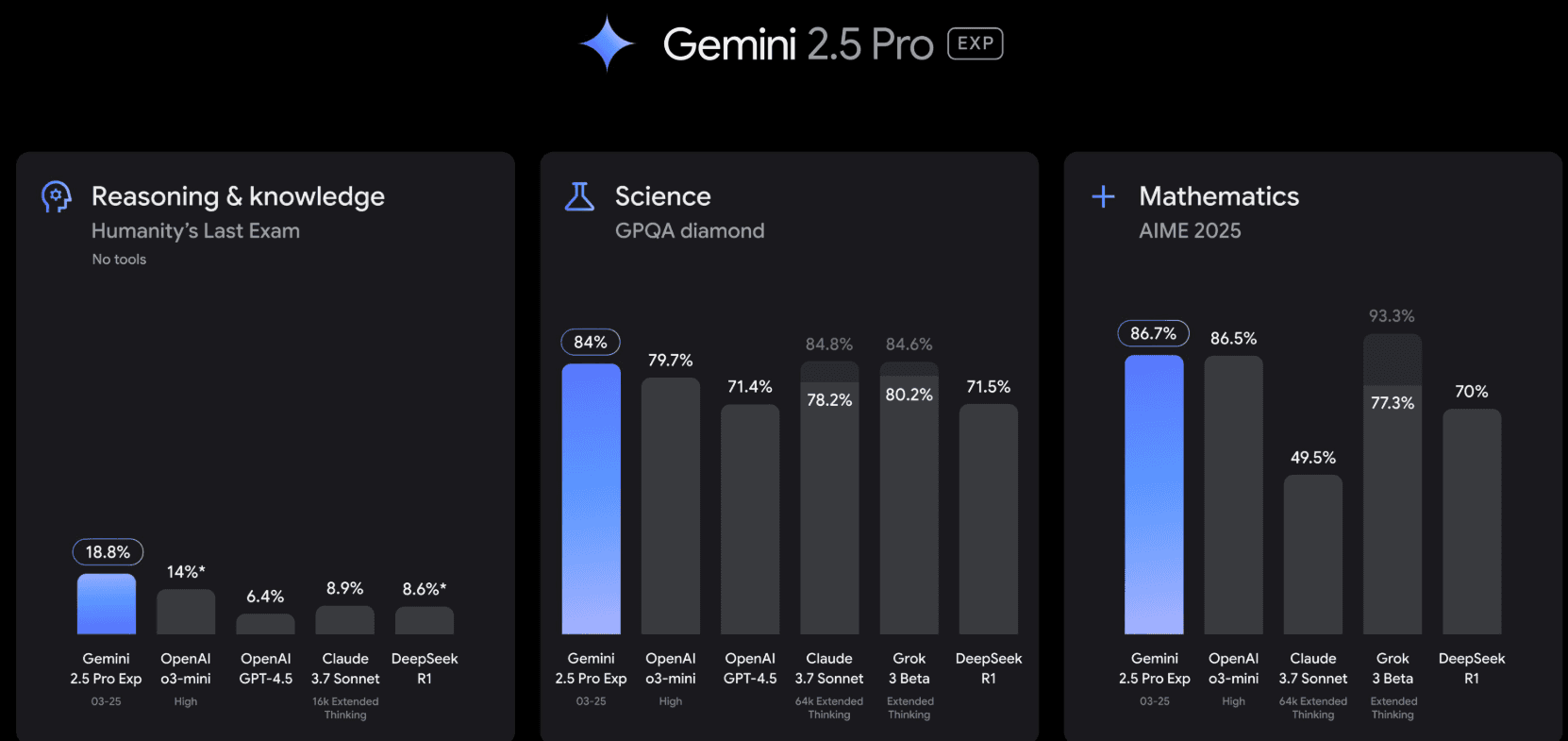

Google’s Gemini 3, paired with Gemini 2.5 Pro and Flash, focuses on huge context windows and tight integration with Docs, Gmail, Drive, and Android. It is excellent for structured reasoning that needs to live inside workflows, but it can still show rough edges on very deep, formal proofs compared with OpenAI’s top reasoning modes.

xAI’s latest Grok 4.1 family (including Fast and Thinking variants) is optimized for speed, long context, and live tool‑calling. It excels at “real‑world” reasoning over streaming and social data, but its personality‑forward behavior and aggressive speed can still lead to occasional shortcuts in validation.

The headline: fluent text is easy; robust reasoning under constraints is not. The newest models prove that “sounding smart” and “being correct under pressure” are now separate product decisions, not just scaling decisions.

Deep Research: From Feature to Product Layer

Deep research has quietly become its own product category, distinct from raw language modeling.

OpenAI’s newer research stack couples its latest reasoning models with autonomous retrieval, source expansion, and multi‑pass synthesis. It is tuned for use cases like due diligence, financial and technical analysis, and complex literature review, and is priced and positioned accordingly for professional and enterprise workflows.

Google’s upgraded Deep Research, powered by Gemini 2.5 Pro and rolling into Gemini 3, is deeply embedded into Workspace: it can read your Docs, Sheets, Drive, and email, orchestrate multi‑document research plans, and generate structured outputs (briefs, matrices, timelines) directly where teams already work.

Perplexity and similar “research‑first” assistants lean hard into multi‑source exploration, citation quality, and fast, opinionated summaries. They often blend different vendors’ reasoning models underneath a research‑oriented UX: concise answers, visible citations, and one‑click deep dives instead of open‑ended chat.

xAI’s Grok research modes emphasize speed and live‑web monitoring, especially around news, markets, and social discourse. They are well suited for situational awareness, but still benefit from human oversight when used as the primary engine for deep, high‑stakes investigation.

These systems act less like chatbots that occasionally browse and more like junior analysts: they plan, search, rank, and then synthesize, with explicit trade‑offs between coverage, speed, and rigor.
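The plan, search, rank, synthesize loop can be sketched in a few functions. This is a minimal sketch under loud assumptions: the in‑memory `CORPUS`, the keyword‑overlap score, and the `max_sources` and `min_score` knobs are hypothetical stand‑ins for real retrieval, a learned ranker, and a model‑written synthesis. It only shows where the coverage, speed, and rigor trade‑offs live.

```python
from dataclasses import dataclass

# Hypothetical in-memory corpus standing in for web search or internal docs.
CORPUS = {
    "doc-a": "Quarterly revenue grew while operating costs fell.",
    "doc-b": "Regulatory filings list two pending lawsuits.",
    "doc-c": "Revenue guidance for next year was lowered.",
    "doc-d": "The company sponsors a local football team.",
}


@dataclass(frozen=True)
class Finding:
    source_id: str
    text: str
    score: float


def tokens(text: str) -> set[str]:
    # Lowercase and strip simple punctuation so "Revenue," matches "revenue".
    return {word.strip(".,;:?!") for word in text.lower().split()}


def plan(question: str) -> list[str]:
    # Plan: split the question into sub-queries (naively, on " and ").
    return [part.strip() for part in question.split(" and ") if part.strip()]


def search_and_rank(query: str, min_score: float) -> list[Finding]:
    # Search + rank: score each document by the share of query terms it contains.
    terms = tokens(query)
    findings = []
    for source_id, text in CORPUS.items():
        score = len(terms & tokens(text)) / len(terms)
        if score >= min_score:  # rigor knob: drop weak matches
            findings.append(Finding(source_id, text, score))
    return findings


def research(question: str, max_sources: int = 3, min_score: float = 0.5) -> list[Finding]:
    # Merge findings across sub-queries, keeping the best score per source.
    best: dict[str, Finding] = {}
    for query in plan(question):
        for finding in search_and_rank(query, min_score):
            current = best.get(finding.source_id)
            if current is None or finding.score > current.score:
                best[finding.source_id] = finding
    # Coverage/speed knob: keep only the top `max_sources` findings.
    ranked = sorted(best.values(), key=lambda f: (-f.score, f.source_id))
    return ranked[:max_sources]


def synthesize(findings: list[Finding]) -> str:
    # Synthesize: a cited bullet list stands in for a model-written summary.
    return "\n".join(f"- {f.text} [{f.source_id}]" for f in findings)


print(synthesize(research("revenue and lawsuits")))
```

Raising `min_score` trades coverage for rigor; lowering `max_sources` trades coverage for speed and cost.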

Why Reasoning and Research Are Splitting

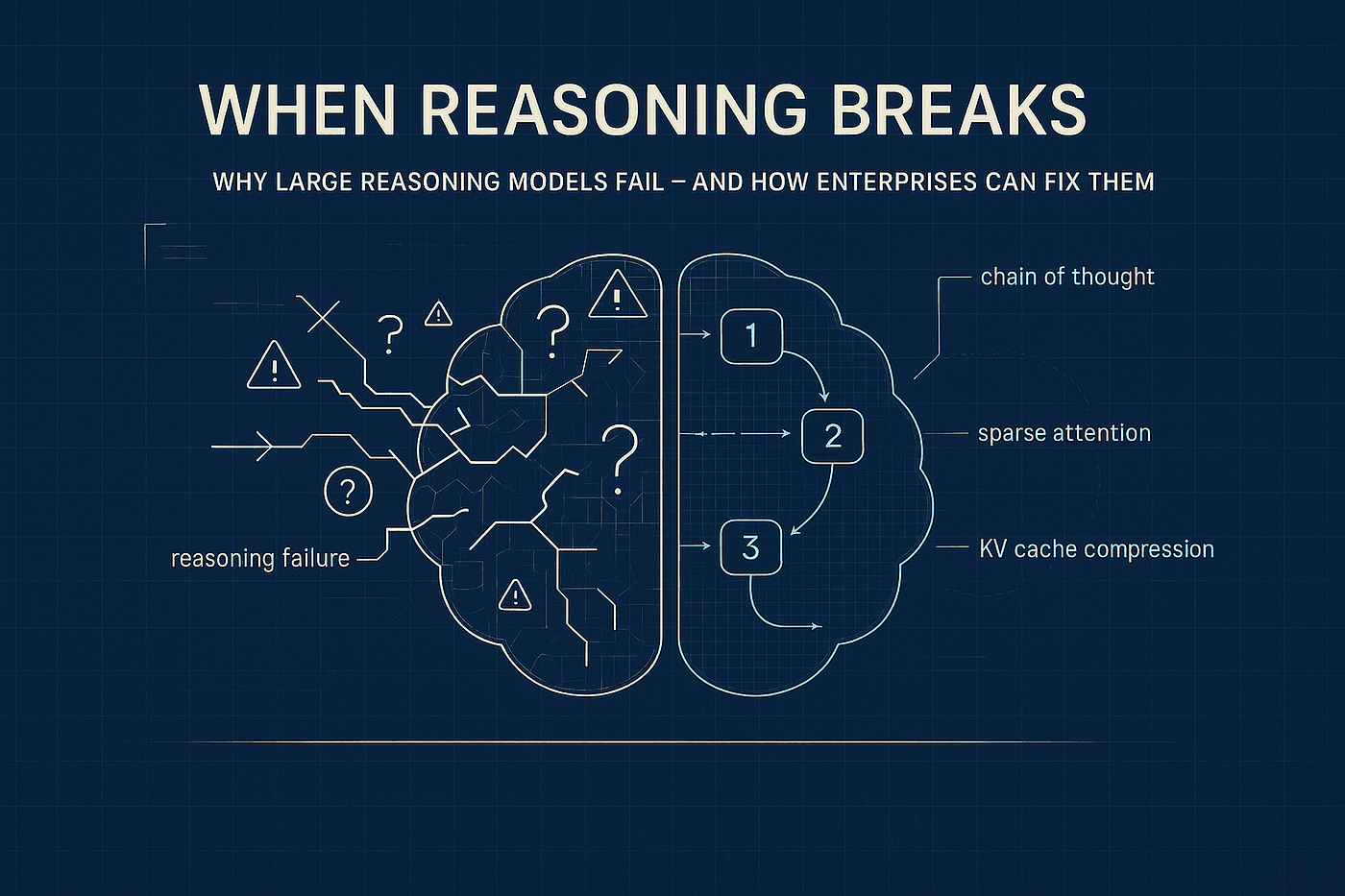

Across the newest models, three structural shifts explain the separation between reasoning and research:

Architecture specialization

Providers are optimizing different parts of their stacks for different tasks: some models are tuned for planning, code, and formal reasoning; others for retrieval, ranking, and summarization. Trying to make one model do everything usually means compromising on at least one of these.

Divergent cost curves

Deep research workloads stress retrieval infrastructure (crawling, indexing, embedding, ranking), while reasoning workloads stress inference (long context, many steps, tool calls). This leads to different economics and pricing strategies, which in turn push vendors toward separate offerings.

Different failure modes

Reasoning failures look like wrong proofs, brittle plans, or unsafe actions. Research failures look like shallow coverage, missing key sources, or hidden bias in what gets retrieved. Treating both as “just chat” hides these very different risks.
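Those research failure modes can be turned into explicit checks instead of staying hidden inside a chat transcript. A minimal sketch, assuming you keep a hand‑labeled set of must‑find sources per test question; `coverage`, `max_domain_share`, and the thresholds are illustrative metrics, not a standard benchmark, and domain concentration is only a rough proxy for retrieval bias.

```python
from collections import Counter
from urllib.parse import urlparse


def coverage(retrieved: list[str], expected: set[str]) -> float:
    # Share of must-find sources that the research run actually retrieved.
    if not expected:
        return 1.0
    return len(expected & set(retrieved)) / len(expected)


def max_domain_share(retrieved: list[str]) -> float:
    # Fraction of results from the single most frequent domain; a high value
    # can signal one-sided retrieval (a rough proxy for hidden bias).
    if not retrieved:
        return 0.0
    domains = Counter(urlparse(url).netloc for url in retrieved)
    return max(domains.values()) / len(retrieved)


def flag_research_run(retrieved, expected, min_coverage=0.8, max_share=0.5):
    # Return human-readable warnings instead of silently accepting the run.
    warnings = []
    if coverage(retrieved, expected) < min_coverage:
        warnings.append("shallow coverage: key sources missing")
    if max_domain_share(retrieved) > max_share:
        warnings.append("concentrated sources: possible retrieval bias")
    return warnings


# Toy run: three of four results come from one domain, and one key source is missing.
run = ["https://a.example/report", "https://a.example/blog",
       "https://a.example/faq", "https://b.example/filing"]
must_find = {"https://b.example/filing", "https://c.example/paper"}
print(flag_research_run(run, must_find))
```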

For founders and builders, the implication is simple: using “whatever default model is in the SDK” is now an architectural risk, not just a convenience.

How to Choose: Intent Before Vendor

With the newest models, the right question is no longer “Which model is best?” but “What is this system actually doing?”

If your product must deliver accurate, defendable long‑form research (e.g., investor memos, legal and regulatory scans, deep market maps), you want a deep‑research‑first engine tied to a strong reasoning model, even if the cost per query is higher.

If you are building agents that plan, code, and reason under constraints (e.g., dev tools, operations copilots, autonomous workflows), you want the strongest reasoning model you can afford, and you can pair it with a cheaper retrieval layer.

If your users need fast, socially aware insights (e.g., news surfaces, sentiment monitors, trading dashboards), Grok 4.x or a fast Gemini mode might fit, but only with clear guardrails and human‑in‑the‑loop verification.

If your goal is broad access across a team (internal copilots, general knowledge tools), cost‑efficient research‑oriented assistants that sit on top of multiple models may give better ROI than a single expensive frontier model.
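The intent‑first checklist can be made explicit as a routing table, so model choice becomes a reviewed design decision rather than an SDK default. A sketch only: the `Intent` names and the tier labels such as `"frontier-reasoning"` are hypothetical placeholders, not real model or product identifiers; map them to whichever vendors you actually use.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Intent(Enum):
    DEFENSIBLE_RESEARCH = auto()  # investor memos, regulatory scans, market maps
    AGENTIC_REASONING = auto()    # dev tools, operations copilots, workflows
    FAST_SOCIAL_INSIGHT = auto()  # news surfaces, sentiment monitors, dashboards
    BROAD_TEAM_ACCESS = auto()    # internal copilots, general knowledge tools


@dataclass(frozen=True)
class Route:
    research: str        # hypothetical research-engine tier
    reasoning: str       # hypothetical reasoning-model tier
    human_in_loop: bool  # True only where the article's guidance calls for it


# Hypothetical tier labels, not real model IDs.
ROUTES = {
    Intent.DEFENSIBLE_RESEARCH: Route("deep-research", "frontier-reasoning", False),
    Intent.AGENTIC_REASONING: Route("cheap-retrieval", "frontier-reasoning", False),
    Intent.FAST_SOCIAL_INSIGHT: Route("live-web", "fast-reasoning", True),
    Intent.BROAD_TEAM_ACCESS: Route("multi-model-assistant", "budget-reasoning", False),
}


def route(intent: Intent) -> Route:
    # A KeyError on an unmapped intent is deliberate: no silent fallback model.
    return ROUTES[intent]


print(route(Intent.FAST_SOCIAL_INSIGHT))
```

Add stricter review flags wherever your own risk policy requires them; the table is the single place to change.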

There is no single “winner” because the workloads are diverging. The winning products will be the ones that know when to pay for deep reasoning, when to pay for deep research, and when “good enough” really is enough.

How to Architect for the Split

To build durable AI products on top of these newest models, treat reasoning and research as different layers in your system:

A reasoning layer that:

Chooses goals, breaks them into steps, decides which tools to call.

Enforces constraints (policy, safety, compliance).

Evaluates competing hypotheses and decides when a result is “good enough.”
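The reasoning layer’s duties can be sketched as two small gates: a policy check on the plan before any tool runs, and a stopping rule that accepts the first hypothesis clearing a quality bar. Everything here is hypothetical: `ALLOWED_TOOLS`, the `score` callable (standing in for a model‑based or rule‑based evaluator), and the 0.8 threshold.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Step:
    tool: str
    argument: str


# Hypothetical policy: the only tools this reasoning layer may call.
ALLOWED_TOOLS = {"search", "read_doc", "calculator"}


def enforce_constraints(steps: list[Step]) -> list[Step]:
    # Reject the whole plan if any step calls a tool outside the allowlist.
    blocked = [step.tool for step in steps if step.tool not in ALLOWED_TOOLS]
    if blocked:
        raise PermissionError(f"plan uses disallowed tools: {blocked}")
    return steps


def choose_hypothesis(
    candidates: list[str],
    score: Callable[[str], float],
    good_enough: float = 0.8,
) -> tuple[str, float]:
    # Score hypotheses in order and stop at the first that clears the bar;
    # otherwise return the best one seen.
    if not candidates:
        raise ValueError("no hypotheses to evaluate")
    best, best_score = "", float("-inf")
    for candidate in candidates:
        s = score(candidate)
        if s >= good_enough:
            return candidate, s  # early stop: remaining candidates are never scored
        if s > best_score:
            best, best_score = candidate, s
    return best, best_score


# Toy scores standing in for a real evaluator.
toy_scores = {"H1": 0.4, "H2": 0.9, "H3": 0.95}
checked = enforce_constraints([Step("search", "filings"), Step("calculator", "ratio")])
print(choose_hypothesis(["H1", "H2", "H3"], lambda h: toy_scores[h]))
```

Stopping early is the “good enough” decision in code: once a hypothesis clears the bar, the remaining candidates cost no further inference, even if one of them might have scored higher.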

A research layer that:

Finds, filters, and ranks sources (web, internal docs, databases).

Tracks provenance and citations.

Offers multiple “views” of the same problem: short brief, deep dive, tables, timelines.
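A research layer that keeps provenance attached to every claim can render several views from the same evidence. A minimal sketch; the `Evidence` record, its fields, and the two view functions are assumptions about what you might track, and the evidence items are toy data.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Evidence:
    claim: str
    source: str         # URL, document ID, or database key
    retrieved_on: date  # when the research layer fetched it
    relevance: float    # score assigned by the ranking step


def brief_view(evidence: list[Evidence], limit: int = 2) -> str:
    # Short brief: the top claims by relevance, each with an inline citation.
    top = sorted(evidence, key=lambda e: e.relevance, reverse=True)[:limit]
    return " ".join(f"{e.claim} [{e.source}]" for e in top)


def table_view(evidence: list[Evidence]) -> list[tuple[str, str, str]]:
    # Table: one row per claim with full provenance, in retrieval order.
    return [(e.claim, e.source, e.retrieved_on.isoformat()) for e in evidence]


# Toy evidence for illustration only.
evidence = [
    Evidence("Revenue grew year over year.", "doc-a", date(2024, 1, 5), 0.9),
    Evidence("Two lawsuits are pending.", "doc-b", date(2024, 1, 6), 0.7),
    Evidence("The company sponsors a football team.", "doc-d", date(2024, 1, 7), 0.1),
]
print(brief_view(evidence))
for row in table_view(evidence):
    print(row)
```

A timeline or deep‑dive view would follow the same pattern, reading from the same records so citations never drift between views.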

These layers can use different vendors and different models. In many cases, the best design will be one frontier reasoning model, plus a more cost‑efficient research assistant, plus your own domain data and retrieval.
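Keeping each layer behind its own interface is what lets the two use different vendors. A sketch using Python’s `typing.Protocol`; `ResearchLayer`, `ReasoningLayer`, `CannedResearch`, and `StubReasoner` are hypothetical names, and the stubs stand in for real vendor clients and retrieval over your own data.

```python
from typing import Protocol


class ResearchLayer(Protocol):
    def gather(self, question: str) -> list[str]: ...


class ReasoningLayer(Protocol):
    def answer(self, question: str, evidence: list[str]) -> str: ...


class CannedResearch:
    # Stub for a cost-efficient research assistant over your own domain data;
    # a real implementation would call a vendor API or internal search.
    def __init__(self, evidence: list[str]):
        self.evidence = evidence

    def gather(self, question: str) -> list[str]:
        return list(self.evidence)


class StubReasoner:
    # Stub for a frontier reasoning model behind a (possibly different) vendor API.
    def answer(self, question: str, evidence: list[str]) -> str:
        if not evidence:
            return "Insufficient evidence; escalate to a human."
        return f"Answer to {question!r} grounded in {len(evidence)} source(s)."


def run(question: str, research: ResearchLayer, reasoning: ReasoningLayer) -> str:
    # The orchestrator depends only on the two interfaces, so either layer
    # can be swapped for another vendor without changing this function.
    return reasoning.answer(question, research.gather(question))


print(run("What changed in Q3?", CannedResearch(["memo [kb-1]", "filing [kb-2]"]), StubReasoner()))
```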